Author Rie Kudan received a prestigious Japanese literary award for her book, The Tokyo Tower of Sympathy, and then disclosed that 5% of her book was written word-for-word by ChatGPT (Choi & Annio, 2024).

Would you let your students submit a paper where 5% of the text was written by ChatGPT?

What about if they disclosed their use of ChatGPT ahead of time?

Or, if they cited ChatGPT as a source?

The rise of generative AI tools like ChatGPT, Copilot, Claude, Gemini, DALL-E, and Meta AI has created a pressing new challenge for educators: defining academic integrity in the age of AI.

As educators and students grapple with what is allowed when using generative AI (GenAI) tools, I have compiled five tips to help you design or redesign academic integrity statements for your syllabus, assignments, exams, and course activities.

1. Banning GenAI tools is not the solution

Many students use GenAI tools to aid their learning. In a meta-analysis on the use of AI chatbots in education, Wu and Yu (2023) found that AI chatbots can significantly improve learning outcomes, specifically in the areas of learning performance, motivation, self-efficacy, interest, and perceived value of learning. Additionally, non-native English speakers and students with language and learning disabilities often turn to these tools to support their thinking, communication, and learning.

Students also need opportunities to learn how to use, and critically analyze, GenAI tools in order to prepare for their future careers. The number of US job postings on LinkedIn that mention “GPT” has increased 79% year over year, and the majority of employers believe that employees will need new skills, including analytical judgment about AI outputs and AI prompt engineering, to be prepared for the future (Microsoft, 2023). The Modern Language Association of America and Conference on College Composition and Communication (MLA-CCCC) Joint Task Force on Writing and AI (2024) recently noted that:

Refusing to engage with GAI helps neither students nor the academic enterprise, as research, writing, reading, and other companion thinking tools are developing at a whirlwind rate and being integrated into students’ future workplaces from tech firms to K–12 education to government offices. We simply cannot afford to adopt a stance of complete hostility to GAI: such a stance incurs the risk of GAI tools being integrated into the fabric of intellectual life without the benefit of humanistic and rhetorical expertise. (pp. 8-9)

Ultimately, banning GenAI tools in a course could negatively impact student learning and exacerbate the digital divide between students who have opportunities to learn how to use these tools and those who do not (Trust, 2023). And banning these tools won’t stop students from using them: when universities tried to ban TikTok, students simply used cellular data and VPNs to circumvent the ban (Alonso, 2023).

However, you do not have to allow students to use GenAI tools all the time in your courses. Students might benefit from using these tools on some assignments, but not others. Or for some class activities, but not others. It is up to you to decide when these tools might be allowed in your courses and to make that clear to your students. Which leads to my next point…

2. Tell your students what YOU allow

Every college and university has an academic integrity/honesty or academic dishonesty statement. However, these statements are often written so broadly that the language is open to different interpretations, or they leave the responsibility of determining what is allowable to the instructor, or both!

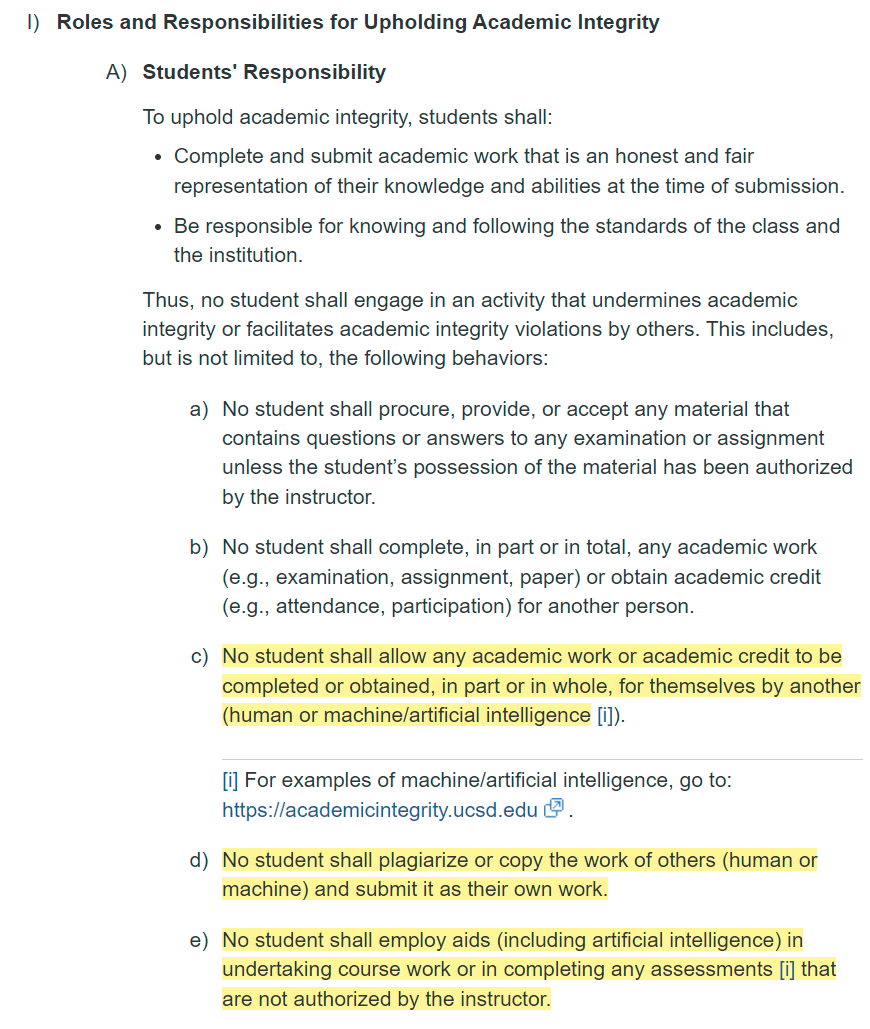

Take a look at UC San Diego’s Academic Honesty Policy (2023) (highlights were added for emphasis).

While this policy is detailed and specific, there is still room for interpretation of the text; and the responsibility of determining whether students can use GenAI tools as a learning aid (section “e”) relies solely on the instructor.

Keeping the UC San Diego academic honesty policy in mind, consider the following:

A student prompted Gemini to rewrite their text to improve the quality of their writing and submitted the AI-generated version of their text.

A student used ChatGPT to write their conclusion word-for-word, but they cited ChatGPT as a source.

A student prompted ChatGPT to draft sentence starters for each paragraph in their midterm paper.

A student used ChatGPT as an aid by prompting it to summarize course readings and make them easier to understand.

Which of these examples is a violation of the policy? This is up to you to determine based on your interpretation of the policy.

Now, think about your students. Some students take three, four, five, and even six classes a semester. Each class is taught by a different instructor who might have their own unique interpretation of the university’s academic integrity policy and a different perspective regarding what is allowable when it comes to GenAI tools and what is not.

Unfortunately, most instructors do not make their perspectives regarding GenAI tools clear to students. This leaves students guessing what is allowed in each course they take. If they guess wrong, they could fail an assignment, fail a course, or even get suspended. These are devastating consequences for a student who is unsure about what is allowed simply because their instructors did not make it clear.

Ultimately, it is up to you, as the instructor, to determine what you allow, and then to let your students know! Write your own GenAI policy to include in your syllabus. Write your own GenAI use policies for assignments, exams, or even class activities. And then, talk with students about these policies and clarify any confusion they might have.

3. Use the three W’s to tell students what you allow

What GenAI tools are allowed?

When are GenAI tools allowed (or not allowed)?

Why are GenAI tools allowed (or not allowed)?

The 3 W’s can be used as a model to write your academic integrity statement in a clear and concise manner.

Let’s start with the first W: What GenAI tools are allowed?

Will you allow your students to use AI text generators? AI image creators? AI video, speech, and audio producers? What about Grammarly? Khanmigo? Or, GenAI tools embedded into Google Workspace?

If you do not clarify what GenAI tools are allowed, students might end up using an AI-enhanced tool, like Grammarly, and be accused of using AI to cheat because they did not know that when you said “No GenAI tools” you meant “No Grammarly, either” (read: She used Grammarly to proofread her paper. Now she’s accused of ‘unintentionally cheating.’).

Please do not put students in a situation of guessing what GenAI tools are allowed or not. The consequences can be dire and students deserve the transparency.

The next W is When are GenAI tools allowed (or not allowed)?

If you simply list what GenAI tools you allow, students might think it is okay to use the tools you listed for every assignment, learning activity, and learning experience in your class.

Students need specific directions for when GenAI tools can be used and when they cannot be used. Do you allow students to use GenAI tools on only one assignment? Every assignment? One part of an assignment? Or, what about for one aspect of learning (e.g., brainstorming) but not another (e.g., writing)? Or, for one class activity (e.g., simulating a virtual debate) but not another (e.g., practicing public speaking by engaging in a class debate)?

To determine when GenAI tools could be used in your classes, you might start with the learning outcomes for an activity or assessment and then identify how GenAI tools might support or subvert these outcomes (MLA-CCCC, 2024). When GenAI tools support, enhance, or enrich learning, it might be worthwhile to allow students to use these tools. When GenAI tools take away from or replace learning, you might tell students not to use these tools.

Making it clear when students can and cannot use GenAI tools will eliminate any guesswork from students and reduce instances of students using GenAI tools when you did not want them to.

The final W is Why are GenAI tools allowed (or not allowed)?

Being transparent about why GenAI tools are allowed or are not allowed helps students understand your reasoning and creates a learning environment where students are more likely to do what you ask them to do.

In the case of writing, for example, you might allow students to use GenAI tools to help with brainstorming ideas, but not with writing or rewriting their work because you believe that the process of putting pen to paper (or fingers to keyboards) is essential for deepening understanding of the course content. Telling students this will give them a clearer sense of why you are asking them to do what you are asking them to do.

If you simply state: “Do not use GenAI tools during your writing process” students might wonder, Why? and might very well use these tools exactly how you asked them not to because they were not given a reason why not to.

To sum up, the three W’s model brings transparency into teaching and learning and makes it clear and easy for students to understand what GenAI tools they can use, when they can use them, and why. This eliminates the guesswork for students and reduces potential fears, anxieties, and stressors about the use of these tools in your courses.

You can use the three W’s as a model for crafting your academic integrity statement for your syllabus and also as a model for clarifying AI use in an assignment (see the image below), on an exam, or during a class activity.

4. Clarify how you will identify AI-generated work and what you will do about it

Even when you provide a detailed AI academic integrity policy and increase transparency around the use of GenAI tools in your courses, students may still use these tools in ways that you do not allow.

It is important to let students know how you plan to identify AI-generated work.

Will you use an AI text detector? Note that these tools are notoriously unreliable, inaccurate, and biased against non-native English speakers and students with disabilities (Liang et al., 2023; Perkins et al., 2024; Weber-Wulff et al., 2023).

Will you simply be on the lookout for text that looks AI-generated? If so, what will you look for? A change in writing voice and tone? Overuse of certain words like “delve”? A Google Docs version history where it appears as though text was copied and pasted in all at once? (see Detecting AI-Generated Text: 12 Things to Watch For)

Keep in mind that your own assumptions and biases might negatively impact certain groups of students as you seek to identify AI-generated work. The MLA-CCCC Joint Task Force (2024) noted that “literature across a number of disciplines has shown that international students and multilingual students who are writing in English are more likely to be accused of GAI-related academic misconduct” both because “GAI detectors are more likely to flag English prose written by nonnative speakers” and “suspicions of misuse of GAI are often due to complex factors, including culture, context, and unconscious ‘native-speakerism’ rather than actual misconduct” (p. 9).

Also, consider what happens if a student submits content that looks or is identified by a detector as AI-generated. Will they automatically fail the assignment? Need to have a conversation with you? Need to prove their knowledge to you in another way (e.g., oral exam)? Be referred to the Dean of Students?

Whatever you decide, being upfront about your expectations can foster a culture of trust between you and your students, and it might even deter students from using the tools in ways that you do not allow them to.

5. Consider whether you will allow students to cite GenAI tools as a source

One final point to consider as you are writing your academic integrity statement is whether students should be allowed to cite GenAI tools as a source.

Many college and university academic integrity/honesty statements indicate that as long as the student cites their sources, including GenAI tools, they are not violating academic integrity. AI syllabus policies, too, often state that students can use GenAI tools as long as they cite them.

But, should students really be encouraged to cite GenAI tools as a source?

Consider, for example, that many of the popular GenAI tools were designed by stealing tons of copyrighted data from the Internet. The companies that created these tools “received billions of dollars of investment while using copyrighted work taken without permission or compensation. This is not fair” (Syed, 2023).

While several companies are currently being sued for using copyrighted data to make their GenAI tools, in many cases, artists, authors, and other individuals whose work has been used without their permission to train these tools are losing their cases because of US copyright law and fair use. GenAI companies are arguing that their tools transform the copyrighted data they scraped from the Internet in a way that falls under fair use protections. However, a study found that large language models, like ChatGPT, sometimes generate passages more than 1,000 words long that have been copied word-for-word from the original training data (McCoy et al., 2023), making ChatGPT a plagiarism machine!

Consider also that GenAI tools can make up (“hallucinate”) content and present harmful and biased information. Do you want students to cite information from a tool that is not designed with the intent of providing factual information?

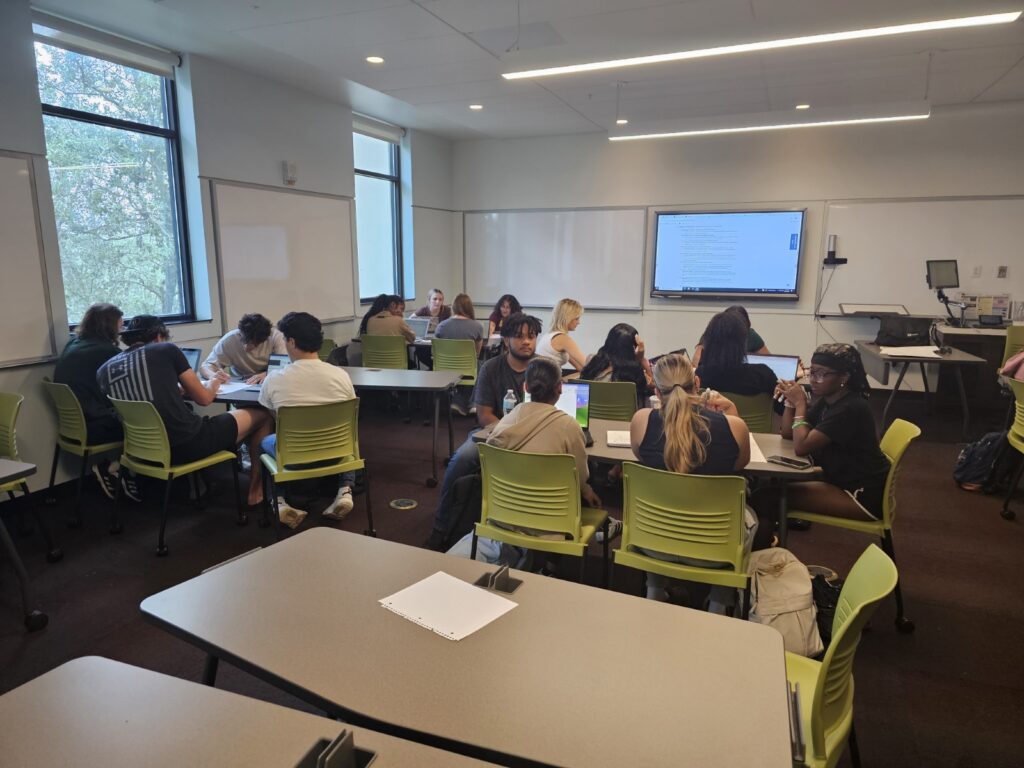

In a recent class activity, I asked my students (future educators) to write their own AI policy statements. Before they wrote their statements, I explained how GenAI tools were designed by scraping copyrighted data from the Internet, and then they interrogated a GenAI tool by asking it at least 10 questions about whether it violated intellectual property rights. Across the board, my students decided that citing GenAI tools would not be allowed and that they want their future students to cite an original source instead.

It is up to you whether you allow students to cite GenAI as a source or not. The most important thing is to be transparent with your students about whether you allow them to cite GenAI tools as a source; and if you do, let them know how much text you would allow them to copy word-for-word into their work as long as they cite a GenAI tool.

So, this brings us back to the question at the start: Would you let your students submit a paper where 5% of the text was written by ChatGPT…as long as they cited ChatGPT as a source?

GenAI Disclosure: The author used Gemini and ChatGPT 3.5 to assist with revising text to improve the quality of the writing. All text was originally written by the author, but some of the text was revised based on suggestions from Gemini and ChatGPT 3.5.

Author Bio

Torrey Trust, PhD, is a professor of learning technology in the Department of Teacher Education and Curriculum Studies in the College of Education at the University of Massachusetts Amherst. Her work centers on the critical examination of the relationship between teaching, learning, and technology; and how technology can enhance teacher and student learning. Dr. Trust has received the University of Massachusetts Amherst Distinguished Teaching Award (2023), the College of Education Outstanding Teaching Award (2020), and the ISTE Making IT Happen Award (2018), which “honors outstanding educators and leaders who demonstrate extraordinary commitment, leadership, courage and persistence in improving digital learning opportunities for students.”

References

Alonso, J. (2023, January 19). Students and experts agree: TikTok bans are useless. Inside Higher Ed. https://www.insidehighered.com/news/2023/01/20/university-tiktok-bans-cause-concern-and-confusion

Choi, C. & Annio, F. (2024, January 19). The winner of a prestigious Japanese literary award has confirmed AI helped write her book. CNN. https://www.cnn.com/2024/01/19/style/rie-kudan-akutagawa-prize-chatgpt/index.html

Liang, W., Yuksekgonul, M., Mao, Y., Wu, E., & Zou, J. (2023). GPT detectors are biased against non-native English writers. Patterns, 4(7).

McCoy, R. T., Smolensky, P., Linzen, T., Gao, J., & Celikyilmaz, A. (2023). How much do language models copy from their training data? Evaluating linguistic novelty in text generation using RAVEN. Transactions of the Association for Computational Linguistics, 11, 652-670.

Microsoft. (2023). Work trend annual index report. https://www.microsoft.com/en-us/worklab/work-trend-index/will-ai-fix-work/

Modern Language Association of America and Conference on College Composition and Communication. (2024). Generative AI and policy development: Guidance from the MLA-CCCC task force. https://cccc.ncte.org/mla-cccc-joint-task-force-on-writing-and-ai

Perkins, M., Roe, J., Vu, B. H., Postma, D., Hickerson, D., McGaughran, J., & Khuat, H. Q. (2024). GenAI detection tools, adversarial techniques and implications for inclusivity in higher education. arXiv preprint. https://arxiv.org/ftp/arxiv/papers/2403/2403.19148.pdf

Syed, N. (2023, November 18). ‘Unmasking AI’ and the fight for algorithmic justice. The Markup. https://themarkup.org/hello-world/2023/11/18/unmasking-ai-and-the-fight-for-algorithmic-justice

Trust, T. (2023, August 2). Essential considerations for addressing the possibility of AI-driven cheating, part 1. Faculty Focus. https://www.facultyfocus.com/articles/teaching-with-technology-articles/essential-considerations-for-addressing-the-possibility-of-ai-driven-cheating-part-1/

UC San Diego. (2023). UC San Diego academic integrity policy. https://senate.ucsd.edu/Operating-Procedures/Senate-Manual/Appendices/2

Weber-Wulff, D., Anohina-Naumeca, A., Bjelobaba, S., Foltýnek, T., Guerrero-Dib, J., Popoola, O., … & Waddington, L. (2023). Testing of detection tools for AI-generated text. International Journal for Educational Integrity, 19(1), 26.

Wu, R. & Yu, Z. (2023). Do AI chatbots improve students learning outcomes? Evidence from a meta-analysis. British Journal of Educational Technology, 55(1), 10-33.

The post Five Tips for Writing Academic Integrity Statements in the Age of AI appeared first on Faculty Focus | Higher Ed Teaching & Learning.
